We have released a new technical report, “Detecting ICMP Rate Limiting in the Internet”, as ISI-TR-717.

From the abstract of our technical report:

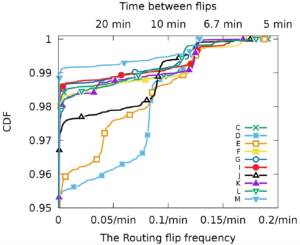

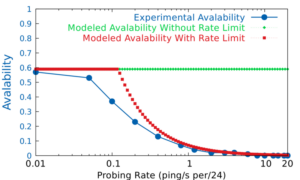

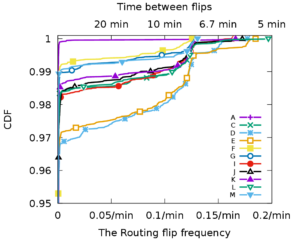

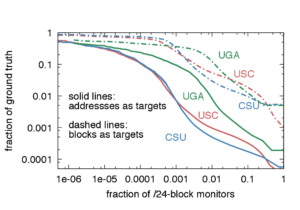

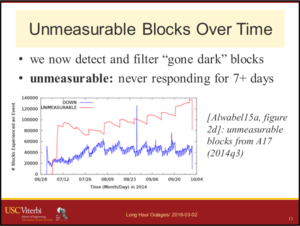

Active probing with ICMP is the center of many network measurements, with tools like ping, traceroute, and their derivatives used to map topologies and as a precursor for security scanning. However, rate limiting of ICMP traffic has long been a concern, since undetected rate limiting of ICMP could distort measurements, silently creating false conclusions. To settle this concern, we look systematically for ICMP rate limiting in the Internet. We develop a model for how rate limiting affects probing, validate it through controlled testbed experiments, and create FADER, a new algorithm that can identify rate limiting from user-side traces with minimal requirements for new measurement traffic. We validate the accuracy of FADER with many different network configurations in testbed experiments and show that it almost always detects rate limiting. Accuracy is perfect when measurement probing ranges from 0 to 60 times the rate limit, and almost perfect (95%) with up to 20% packet loss. The worst case for detection is when probing is very fast and blocks are very sparse, but even there accuracy remains good (measurement at 60 times the rate limit of a 10% responsive block is correct 65% of the time). With this confidence, we apply our algorithm to a random sample of the whole Internet, showing that rate limiting exists but that for slow probing rates, rate limiting is very, very rare. For our random sample of 40,493 /24 blocks (about 2% of the responsive space), we confirm 6 blocks (0.02%!) see rate limiting at 0.39 packets/s per block. We look at higher rates in public datasets and suggest that fall-off in responses as rates approach 1 packet/s per /24 block (14M packets/s from the prober to the whole Internet) is consistent with rate limiting. We also show that even very slow probing (0.0001 packet/s) can encounter rate limiting of NACKs that are concentrated at a single router near the prober.
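To make the idea of a model for how rate limiting affects probing a little more concrete, here is a minimal sketch in Python. It is not the model from the report and not FADER. It assumes a simple limiter that passes at most `rate_limit` probes per second to a block and drops the excess uniformly, so the response fraction a prober sees falls off as the probing rate exceeds the limit. The function and parameter names are hypothetical, chosen only for illustration.

```python
def expected_response_fraction(probe_rate, rate_limit, availability=1.0):
    """Fraction of probes expected to get a reply under a simple limiter.

    Assumption (illustrative only): the limiter forwards at most
    `rate_limit` probes per second and drops the rest uniformly, and a
    fraction `availability` of the probed addresses would reply if reached.
    """
    if probe_rate <= 0 or rate_limit <= 0:
        raise ValueError("probe_rate and rate_limit must be positive")
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if probe_rate <= rate_limit:
        # Below the limit nothing is dropped, so the prober sees the
        # block's true availability.
        return availability
    # Above the limit only rate_limit / probe_rate of the probes get through.
    return availability * rate_limit / probe_rate


# A sparse block (10% responsive) probed below and far above the limit.
slow = expected_response_fraction(probe_rate=0.5, rate_limit=1.0, availability=0.1)
fast = expected_response_fraction(probe_rate=60.0, rate_limit=1.0, availability=0.1)
print(f"slow probing: {slow:.4f}, fast probing: {fast:.4f}")
```

Under these assumptions, a sparse block probed at 60 times the limit returns very few replies at all, which gives some intuition for why the abstract calls fast probing of sparse blocks the worst case for detection.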

The datasets used in this paper are all public. ISI Internet Census and Survey data (including the it71w, it70w, it56j, it57j, and it58j censuses and surveys) are available at https://ant.isi.edu/datasets/index.html. The ZMap 50-second experiment data come from the ZMap authors' WOOT 14 paper and can be obtained from them upon request.

This technical report is joint work of Hang Guo and John Heidemann from USC/ISI.
