We are happy to announce a new project, Detecting, Interpreting, and Validating from Outside, In, and Control, Disruptive Events (DIVOICE).

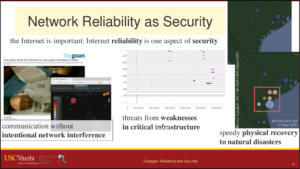

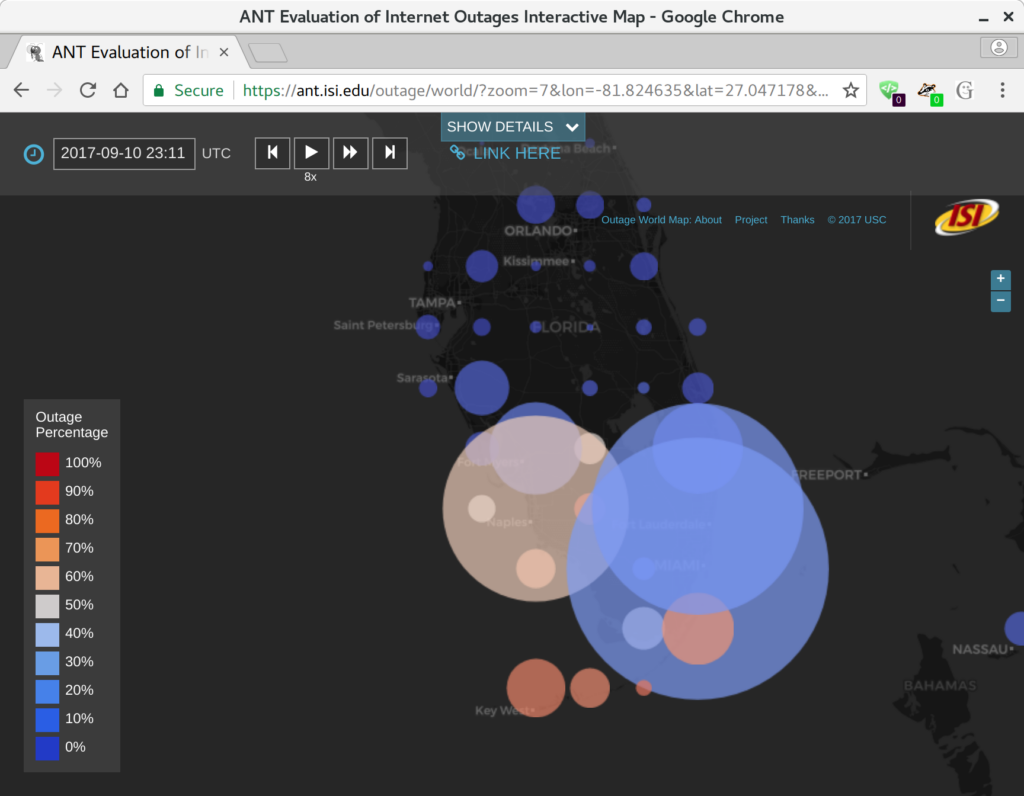

The goal of the DIVOICE project is to detect and understand Network/Internet Disruptive Events (NIDEs), that is, outages in the Internet.

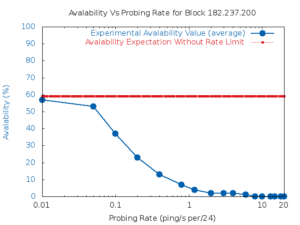

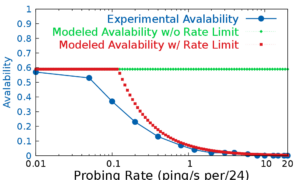

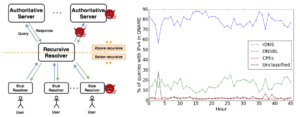

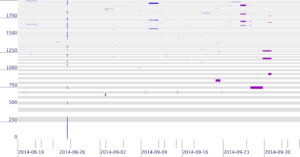

We will work toward this goal by examining outages at multiple levels of the network: at the data plane, with tools such as Trinocular (developed at USC/ISI) and Disco (developed at IIJ); at the control plane, with tools such as BGPMon (developed at Colorado State University); and at the application layer.

We expect to improve methods of outage detection, validate the results of these methods against one another and against external sources of information, and work toward attributing the root causes of outages.
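As a rough illustration of what validating detection methods against one another could look like, the sketch below checks whether outages reported by a data-plane detector overlap in time with outages reported for the same network block by a control-plane detector. The `Outage` record, the `cross_validate` function, and the 60-second overlap threshold are all hypothetical and chosen for illustration. They do not reflect the actual output formats or methods of Trinocular, Disco, or BGPMon.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outage:
    """Hypothetical outage record; not the format of any real tool."""
    prefix: str  # affected network block, e.g. "192.0.2.0/24"
    start: int   # start time, seconds since the epoch
    end: int     # end time (exclusive), seconds since the epoch


def overlap_seconds(a: Outage, b: Outage) -> int:
    """Seconds during which both outages are active on the same prefix."""
    if a.prefix != b.prefix:
        return 0
    return max(0, min(a.end, b.end) - max(a.start, b.start))


def cross_validate(data_plane, control_plane, min_overlap=60):
    """Split data-plane outages into those corroborated by a
    control-plane outage (overlap >= min_overlap seconds) and the rest.

    min_overlap is an assumed threshold, not a value from the project.
    """
    confirmed, unconfirmed = [], []
    for d in data_plane:
        if any(overlap_seconds(d, c) >= min_overlap for c in control_plane):
            confirmed.append(d)
        else:
            unconfirmed.append(d)
    return confirmed, unconfirmed


if __name__ == "__main__":
    data = [
        Outage("192.0.2.0/24", 0, 600),
        Outage("198.51.100.0/24", 1000, 1300),
    ]
    ctrl = [Outage("192.0.2.0/24", 300, 900)]
    confirmed, unconfirmed = cross_validate(data, ctrl)
    print("corroborated:", confirmed)
    print("data plane only:", unconfirmed)
```

An outage seen by only one detector is not necessarily a false positive: some disruptions are visible at the data plane without any change in routing, which is one reason to examine several levels of the network.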

DIVOICE is a joint effort of the ANT Lab involving USC/ISI (PI: John Heidemann) and Colorado State University (PI: Craig Partridge). DIVOICE builds on prior work on the LACANIC and Retro-Future Bridge and Outage projects. DIVOICE is supported by the DHS HSARPA Cyber Security Division via contract number 70RSAT18CB0000014.