I would like to congratulate Dr. Abdul Qadeer for defending his PhD at the University of Southern California in March 2021 and completing his doctoral dissertation “Efficient Processing of Streaming Data in Multi-User and Multi-Abstraction Workflows”.

From the abstract:

Ever-increasing data and evolving processing needs force enterprises to scale-out expensive computational resources to prioritize processing for timely results. Teams process their organization’s data either independently or using ad hoc sharing mechanisms. Often different users start with the same data and the same initial stages (decrypt, decompress, clean, anonymize). As their workflows evolve, later stages often diverge, and different stages may work best with different abstractions. The result is workflows with some overlap, some variations, and multiple transitions where data handling changes between continuous, windowed, and per-block. The system processing this diverse, multi-user, multi-abstraction workflow should be efficient and safe, but also must cope with fault recovery.

Analytics from multiple users can cause redundant processing and data, or encounter performance anomalies due to skew. Skew arises due to static or dynamic imbalance in the workflow stages. Both redundancy and skew waste compute resources and add latency to results. When users bridge between multiple abstractions, such as from per-block processing to windowed processing, they often employ custom code. These transitions can be error prone due to corner cases, can easily add latency as an inefficiency, and custom code is often a source of errors and maintenance difficulty. We need new solutions to manage the above challenges and to expose opportunities for data sharing explicitly. Our thesis is: new methods enable efficient processing of multi-user and multi-abstraction workflows of streaming data. We present two new methods for efficient stream processing—optimizations for multi-user workflows, and multiple abstractions for application coverage and efficient bridging.

These algorithms use a pipeline-graph to detect duplication of code and data across multiple users and cleanly delineate workflow stages for skew management. The pipeline-graph is our job description language that allows developers to specify their need easily and enables our system to automatically detect duplication and manage skew. The pipeline-graph acts as a shared canvas for collaboration amongst users to extend each other’s work. To efficiently implement our deduplication and skew management algorithms, we present streaming data to processing stages as fixed-sized but large blocks. Large-blocks have low meta-data overhead per user, provide good parallelism, and help with fault recovery.

Our second method enables applications to use a different abstraction on a different workflow stage. We provide three key abstractions and show that they cover many classes of analytics and our framework can bridge them efficiently. We provide Block-Streaming, Windowed-Streaming, and Stateful-Streaming abstractions. Block-Streaming is suitable for single-pass applications that care about temporal or spatial locality. Windowed-Streaming allows applications to process accumulated data (time-aligned blocks to sync with external information) and reductions like summation, averages, or other MapReduce-style analytics. We believe our three abstractions allow many classes of analytics and enable processing of one block, many blocks, or infinite stream. Plumb allows multiple abstractions in different parts of the workflow and provides efficient bridging between them so that users could make complex analytics from individual stages without worrying about data movement.

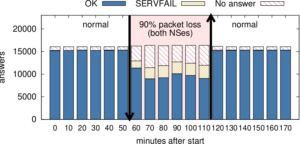

Our methods aim for good throughput, low latency, and clean and easy-to-use support for more applications to achieve better efficiency than our prior hand-tuned but often brittle system. The Plumb framework is the implementation of our solutions and a testbed to validate them. We use real-world workloads from the B-Root DNS domain to demonstrate effectiveness of our solutions. Our processing deduplication increases throughput up to $6\times$, reduces storage by 75%, as compared to their pre-Plumb counterparts. Plumb reduces CPU wastage due to structural skew up to half and reduces latency due to computational skew by 50%. Plumb has cut per-block latency by 74% and latency of daily statistics by 97%, while reducing code size by 58% and lowering manual intervention to handle problems by 73% as compared to pre-Plumb system.

The operational use of Plumb for the B-Root service provides a multi-year validation of our design choices under many traffic conditions. Over the last three years, Plumb has processed more than 12PB of DNS packet data and daily statistics. We show that our abstractions apply to many applications in the domain of networking big-data and beyond.