We have published a new paper, “Who Knocks at the IPv6 Door? Detecting IPv6 Scanning” by Kensuke Fukuda and John Heidemann, in the ACM Internet Measurement Conference (IMC 2018) in Boston, Mass., USA.

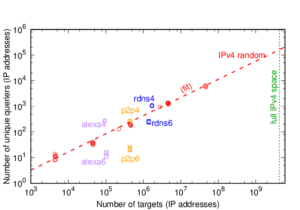

DNS backscatter detects internet-wide activity by looking for common reverse DNS lookups at authoritative DNS servers that are high in the DNS hierarchy. Both DNS backscatter and monitoring unused address space (darknets or network telescopes) can detect scanning in IPv4, but with IPv6’s vastly larger address space, darknets become much less effective. This paper shows how to adapt DNS backscatter to IPv6. IPv6 requires new classification rules, but these reveal large network services, from cloud providers and CDNs to specific services such as NTP and mail. DNS backscatter also identifies router interfaces, suggesting traceroute-based topology studies. We identify 16 scanners per week from DNS backscatter using observations from the B-root DNS server, with confirmation from backbone traffic observations or blacklists. After eliminating benign services, we classify another 95 originators in DNS backscatter as potential abuse. Our work also confirms that IPv6 appears to be less carefully monitored than IPv4.
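To make the reverse-lookup idea concrete, here is a minimal Python sketch of the first step only: recovering the IPv6 address (the "originator") encoded in an `ip6.arpa` PTR query name and counting how many distinct resolvers asked about it. This is an illustration, not the paper's pipeline. The function names, the `(querier, qname)` log format, and the `min_queriers` threshold of 3 are all assumptions made for this example, and the paper's classification rules are not reproduced here.

```python
import ipaddress
from collections import defaultdict

HEX_DIGITS = set("0123456789abcdef")


def originator_from_ptr(qname):
    """Return the IPv6 address encoded in an ip6.arpa PTR name, or None.

    An IPv6 reverse name is the address's 32 hex nibbles in reverse
    order, separated by dots, followed by "ip6.arpa".
    """
    name = qname.rstrip(".").lower()
    suffix = ".ip6.arpa"
    if not name.endswith(suffix):
        return None
    nibbles = name[: -len(suffix)].split(".")
    if len(nibbles) != 32 or any(n not in HEX_DIGITS for n in nibbles):
        return None
    return ipaddress.IPv6Address(int("".join(reversed(nibbles)), 16))


def candidate_originators(log, min_queriers=3):
    """Map each originator to its number of distinct queriers.

    `log` is an iterable of (querier, qname) pairs (a hypothetical
    format). Only originators looked up by at least `min_queriers`
    distinct queriers are kept; the threshold is an illustrative
    assumption, not a value taken from the paper.
    """
    queriers = defaultdict(set)
    for querier, qname in log:
        originator = originator_from_ptr(qname)
        if originator is not None:
            queriers[originator].add(querier)
    return {o: len(q) for o, q in queriers.items() if len(q) >= min_queriers}
```

An address that many unrelated resolvers look up in a short window is a candidate for internet-wide activity. Deciding whether that activity is a scanner, a CDN, an NTP server, or a router interface is the classification step the paper addresses.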

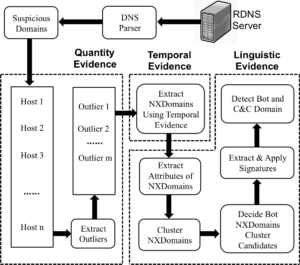

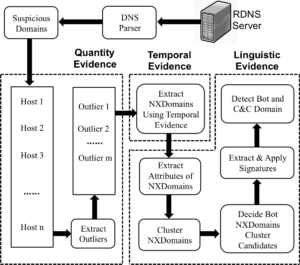

These limitations make it very hard to detect individual bots when using traffic collected from a single network. In this paper, we introduce BotDigger, a system that detects DGA-based bots using DNS traffic without a priori knowledge of the domain generation algorithm. BotDigger utilizes a chain of evidence, including quantity, temporal and linguistic evidence
