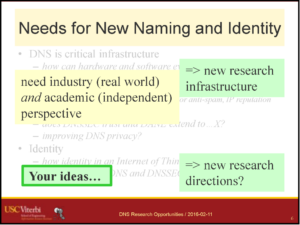

John Heidemann gave the talk “New Opportunities for Research and Experiments in Internet Naming And Identification” at the AIMS 2016 workshop at CAIDA, La Jolla, California on February 11, 2016. Slides are available at http://www.isi.edu/~johnh/PAPERS/Heidemann16a.pdf.

From the abstract:

DNS is central to Internet use today, yet research on DNS today is challenging: many researchers find it challenging to create realistic experiments at scale and representative of the large installed base, and datasets are often short (two days or less) or otherwise limited. Yes DNS evolution presses on: improvements to privacy are needed, and extensions like DANE provide an opportunity for DNS to improve security and support identity management. We exploring how to grow the research community and enable meaningful work on Internet naming. In this talk we will propose new research infrastructure to support to realistic DNS experiments and longitudinal data studies. We are looking for feedback on our proposed approaches and input about your pressing research problems in Internet naming and identification.

For more information see our project website.

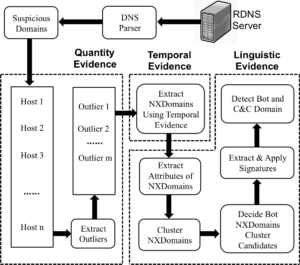

These limitations make it very hard to detect individual bots when using traffic collected from a single network. In this paper, we introduce BotDigger, a system that detects DGA-based bots using DNS traffic without a priori knowledge of the domain generation algorithm. BotDigger utilizes a chain of evidence, including quantity, temporal and linguistic evidence

These limitations make it very hard to detect individual bots when using traffic collected from a single network. In this paper, we introduce BotDigger, a system that detects DGA-based bots using DNS traffic without a priori knowledge of the domain generation algorithm. BotDigger utilizes a chain of evidence, including quantity, temporal and linguistic evidence