Our new paper “Deep Dive into NTP Pool’s Popularity and Mapping” will appear at the SIGMETRICS 2024 conference and concurrently in the Proceedings of the ACM on Measurement and Analysis of Computing Systems, vol. 8, no. 1, March 2024.

From the abstract:

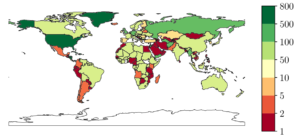

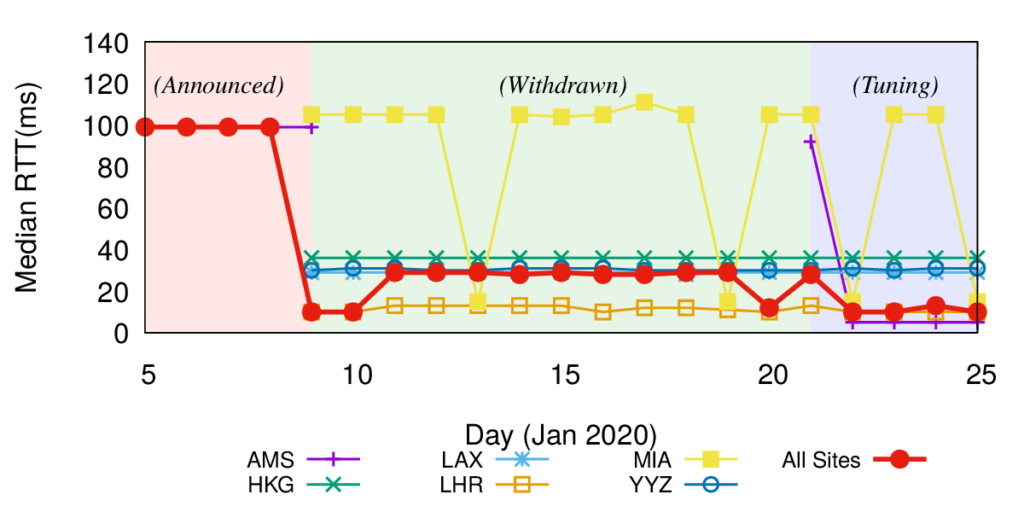

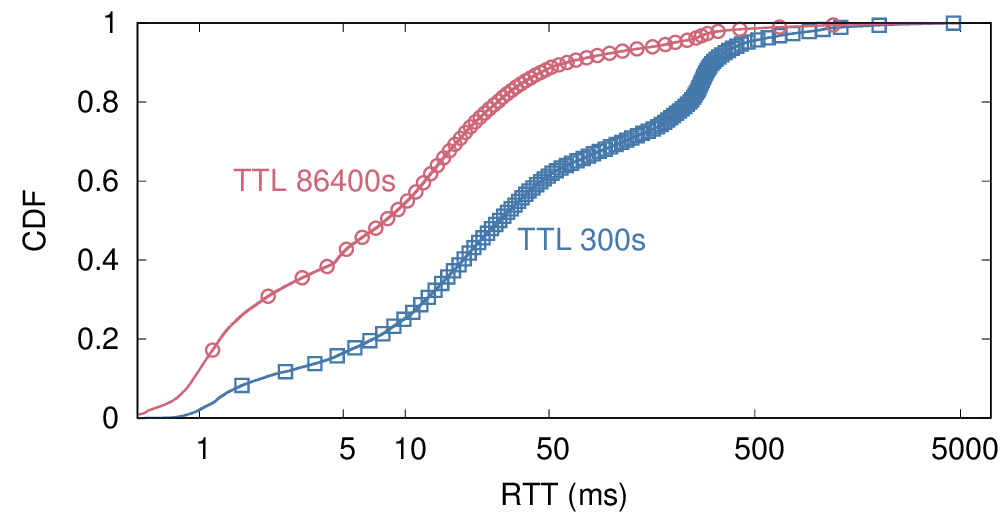

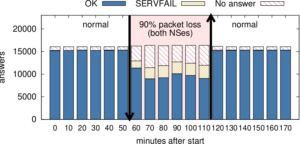

Time synchronization is of paramount importance on the Internet, with the Network Time Protocol (NTP) serving as the primary synchronization protocol. The NTP Pool, a volunteer-driven initiative launched two decades ago, facilitates connections between clients and NTP servers. Our analysis of root DNS queries reveals that the NTP Pool has consistently been the most popular time service. We further investigate the DNS component (GeoDNS) of the NTP Pool, which is responsible for mapping clients to servers. Our findings indicate that the current algorithm is heavily skewed, leading to the emergence of time monopolies for entire countries. For instance, clients in the US are served by 551 NTP servers, while clients in Cameroon and Nigeria are served by only one and two servers, respectively, out of the 4k+ servers available in the NTP Pool. We examine the underlying assumption behind GeoDNS for these mappings and discover that time servers located far away can still provide accurate clock time information to clients. We have shared our findings with the NTP Pool operators, who acknowledge them and plan to revise their algorithm to enhance security.

This paper is joint work by

Giovane C. M. Moura (1, 2), Marco Davids (1), Caspar Schutijser (1), Christian Hesselman (1, 3), John Heidemann (4, 5), and Georgios Smaragdakis (2), with 1: SIDN Labs, 2: Technical University, Delft, 3: the University of Twente, 4: the University of Southern California/Information Sciences Institute, 5: USC/Computer Science Dept.

This work was supported by the RIPE NCC (via Atlas), the Root Operators and DNS-OARC (for DITL), the SIDN Labs time.nl project, the Twente University Centre for Cyber Security Research, NSF projects CNS-2212480 and CNS-2319409, the European Research Council ResolutioNet (679158), the Dutch 6G Future Network Services project, and the EU programme Horizon Europe grants SEPTON (101094901), MLSysOps (101092912), and TANGO (101070052).
