John Heidemann gave the talk “Anycast Latency: How Many Sites are Enough?” at DNS-OARC in Dallas, Texas, USA on October 16, 2016. Slides are available at http://www.isi.edu/~johnh/PAPERS/Heidemann16b.pdf.

![Comparing actual (obtained) anycast latency against optimal possible anycast latency, for 4 different anycast deployments (each a Root Letter). From the talk [Heidemann16b], based on data from [Moura16b].](https://ant.isi.edu/blog/wp-content/uploads/2016/10/Heidemann16b_icon-300x212.png)

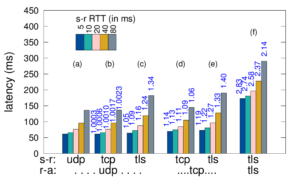

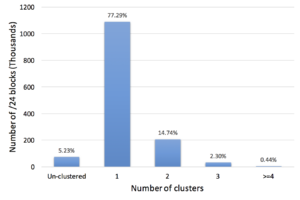

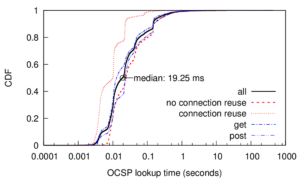

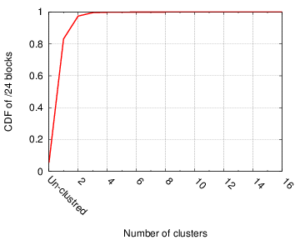

This talk evaluates anycast latency. An anycast service uses multiple sites to provide high availability, capacity, and redundancy, with BGP routing associating users with nearby anycast sites. Routing defines the catchment of users that each site serves. Although prior work has informally studied how users associate with anycast services, here we examine the key question of how many anycast sites are needed to provide good latency, and the worst-case latencies that specific deployments see. To answer this question, we must first define the optimal performance that is possible, then explore how routing, specific anycast policies, and site location affect performance. We develop a new method capable of determining optimal performance and use it to study four real-world anycast services operated by different organizations: C-, F-, K-, and L-Root, each part of the Root DNS service. We measure their performance from worldwide vantage points (VPs) in RIPE Atlas. (Given the VPs' uneven geographic distribution, we evaluate and control for potential bias.) Key results of our study show that a few sites can provide performance nearly as good as many sites, and that geographic location and good connectivity have a far stronger effect on latency than having many nodes. We show how often users see the closest anycast site, and how strongly routing policy affects site selection.
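To make the comparison between actual and optimal latency concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method from the technical report: it assumes that for each VP we already have an RTT to every site of one anycast service, plus the site that routing actually selected (its catchment). The site labels, RTT values, and function names are all hypothetical.

```python
from statistics import median

# Hypothetical data: per-VP RTTs (ms) to every site of one anycast service,
# plus the site that BGP routing actually selected for that VP.
MEASUREMENTS = {
    "vp1": {"rtts": {"AMS": 12.0, "LAX": 150.0, "NRT": 240.0}, "catchment": "AMS"},
    "vp2": {"rtts": {"AMS": 145.0, "LAX": 20.0, "NRT": 110.0}, "catchment": "NRT"},
    "vp3": {"rtts": {"AMS": 250.0, "LAX": 105.0, "NRT": 8.0}, "catchment": "NRT"},
    "vp4": {"rtts": {"AMS": 30.0, "LAX": 140.0, "NRT": 230.0}, "catchment": "LAX"},
}


def summarize(measurements):
    """Compare actual latency (site chosen by routing) with optimal latency
    (lowest-RTT site) and count how often routing picks the closest site."""
    actual, optimal, closest_hits = [], [], 0
    for vp in measurements.values():
        rtts = vp["rtts"]
        actual_rtt = rtts[vp["catchment"]]
        optimal_rtt = min(rtts.values())
        actual.append(actual_rtt)
        optimal.append(optimal_rtt)
        if actual_rtt == optimal_rtt:
            closest_hits += 1
    return {
        "median_actual_ms": median(actual),
        "median_optimal_ms": median(optimal),
        "closest_site_fraction": closest_hits / len(measurements),
    }


def median_optimal_with_sites(measurements, sites):
    """Median best-case RTT if only the given subset of sites existed."""
    return median(
        min(rtt for site, rtt in vp["rtts"].items() if site in sites)
        for vp in measurements.values()
    )


if __name__ == "__main__":
    print(summarize(MEASUREMENTS))
    for subset in ({"AMS"}, {"AMS", "NRT"}, {"AMS", "LAX", "NRT"}):
        print(sorted(subset), median_optimal_with_sites(MEASUREMENTS, subset))
```

With this toy data, the gap between the actual and optimal medians reflects VPs that routing sends to a more distant site, and the per-subset medians show how the best achievable latency changes as sites are added. Real measurements would also need the geographic bias correction that the abstract mentions, which this sketch omits.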

This talk is based on the work in the technical report “Anycast Latency: How Many Sites Are Enough?” (ISI-TR-2016-708), by Ricardo de O. Schmidt, John Heidemann, and Jan Harm Kuipers.

Datasets from the paper are available at https://ant.isi.edu/datasets/anycast/

![[Schmidt16a] figure 4: distribution of measured latency (solid lines) compared to optimal possible latency (dashed lines) for 4 Root DNS anycast deployments.](https://ant.isi.edu/blog/wp-content/uploads/2016/05/plot-cdf-optimal-300x210.png)
