We have published a new paper “When the Dike Breaks: Dissecting DNS Defenses During DDoS” in the ACM Internet Measurement Conference (IMC 2018) in Boston, Mass., USA.

From the abstract:

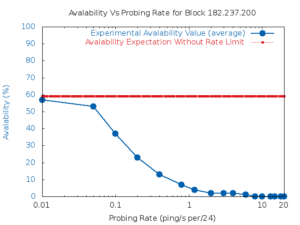

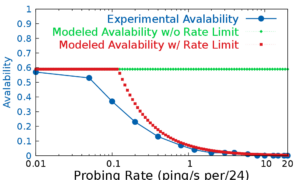

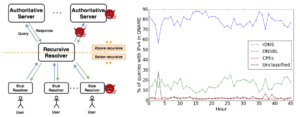

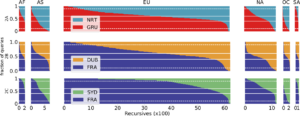

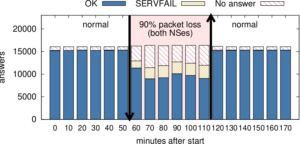

The Internet’s Domain Name System (DNS) is a frequent target of Distributed Denial-of-Service (DDoS) attacks, but such attacks have had very different outcomes: some attacks have disabled major public websites, while the external effects of other attacks have been minimal. While on one hand the DNS protocol is relatively simple, the *system* has many moving parts, with multiple levels of caching and retries and replicated servers. This paper uses controlled experiments to examine how these mechanisms affect DNS resilience and latency, exploring both the client side’s DNS *user experience*, and server-side traffic. We find that, for about 30% of clients, caching is not effective. However, when caches are full they allow about half of clients to ride out server outages that last less than cache lifetimes. Caching and retries together allow up to half of the clients to tolerate DDoS attacks longer than cache lifetimes, with 90% query loss, and almost all clients to tolerate attacks resulting in 50% packet loss. While clients may get service during an attack, tail-latency increases for clients. For servers, retries during DDoS attacks increase normal traffic up to 8×. Our findings about caching and retries help explain why users see service outages from some real-world DDoS events, but minimal visible effects from others.
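As a rough intuition for why retries matter at the loss rates mentioned in the abstract, the sketch below computes the chance that at least one query gets through when each attempt is lost independently. This is a simplified model and not the paper's methodology: the independent-loss assumption, the function name `success_probability`, and the attempt counts are all illustrative, and caching is not modeled at all.

```python
def success_probability(loss_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` queries is answered.

    Assumes each attempt is lost independently with probability
    `loss_rate` (an illustrative simplification, not a measured model).
    """
    if not 0.0 <= loss_rate <= 1.0:
        raise ValueError("loss_rate must be between 0 and 1")
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    # All attempts fail with probability loss_rate ** attempts.
    return 1.0 - loss_rate ** attempts


if __name__ == "__main__":
    # Hypothetical attempt counts; real resolvers differ in retry behavior.
    for loss in (0.5, 0.9):
        for attempts in (1, 3, 7):
            p = success_probability(loss, attempts)
            print(f"loss={loss:.0%} attempts={attempts} success={p:.3f}")
```

Under this model each extra attempt raises the chance of an answer, but every retry is also another query arriving at the server, which is consistent with the abstract's observation that retries increase server traffic during an attack.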

Datasets from this paper are available at no cost and are listed at https://ant.isi.edu/datasets/dns/#Moura18b_data.