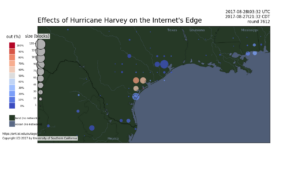

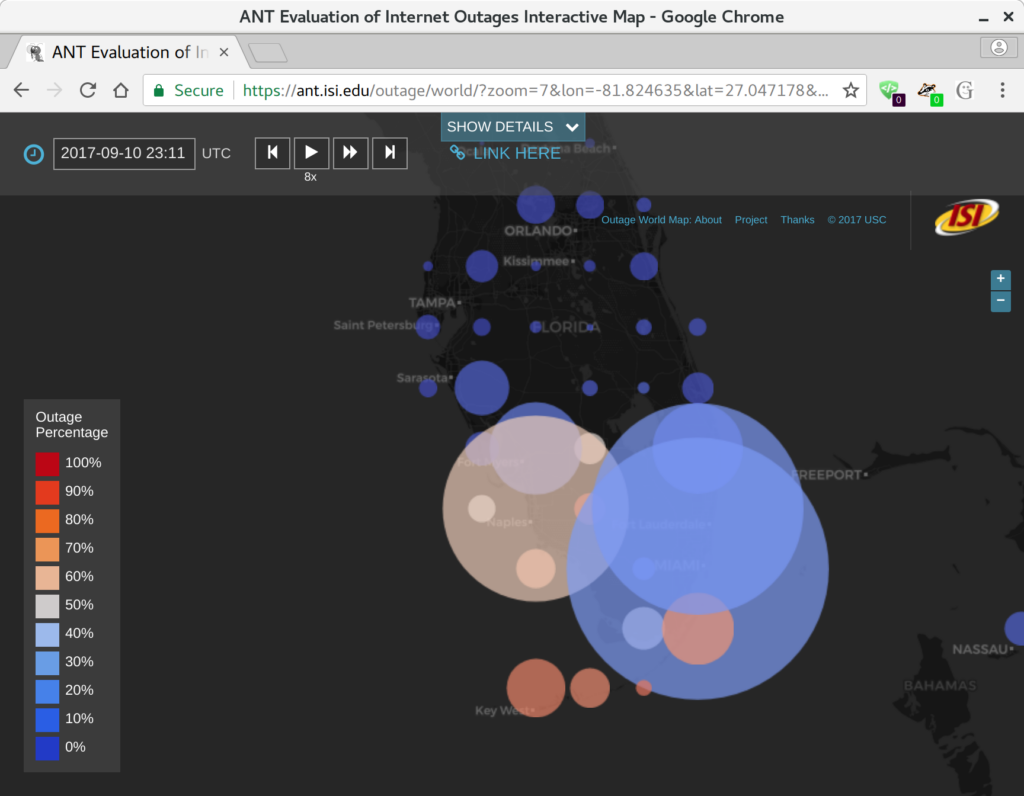

We are happy to announce a new website at https://ant.isi.edu/outage/world/ that supports our Internet outage data collected from Trinocular. (Update 2025: the website is now https://outage.ant.isi.edu/ )

Our website supports browsing more than two years of outage data, organized by geography and time. The map is a Google Maps-style world map with circles on it at even intervals (every 0.5 to 2 degrees of latitude and longitude, depending on the zoom level). Circle sizes show how many /24 network blocks are out in that location, while circle colors show the percentage of outages, from blue (only a few percent) to red (approaching 100%).
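To make the map encoding concrete, here is a minimal Python sketch of how per-block outage status could be aggregated into grid cells and turned into a circle size and color. It is not the website's actual code: the input format (one `(lat, lon, is_out)` tuple per /24 block), the function names, the linear blue-to-red ramp, and the reading of "percentage of outages" as the fraction of a cell's blocks that are out are all assumptions made for illustration.

```python
import math
from collections import defaultdict


def cell_index(lat, lon, cell_deg):
    """Snap a coordinate to integer grid indices for cells of cell_deg degrees."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def summarize(blocks, cell_deg=1.0):
    """Aggregate /24 blocks into grid cells.

    blocks: iterable of (lat, lon, is_out) tuples (hypothetical input format).
    Returns {cell: (blocks_out, fraction_out)}; blocks_out would drive the
    circle size and fraction_out the circle color.
    """
    totals = defaultdict(int)
    outs = defaultdict(int)
    for lat, lon, is_out in blocks:
        cell = cell_index(lat, lon, cell_deg)
        totals[cell] += 1
        if is_out:
            outs[cell] += 1
    return {cell: (outs[cell], outs[cell] / totals[cell]) for cell in totals}


def color_for(fraction):
    """Map a fraction in [0, 1] to an (R, G, B) tuple, blue (low) to red (high).

    The linear ramp is an assumed color scale, not the site's real palette.
    """
    f = min(max(fraction, 0.0), 1.0)
    return (round(255 * f), 0, round(255 * (1 - f)))


# Example with made-up blocks: three in one 1-degree cell, one elsewhere.
sample = [
    (34.2, -118.3, True),
    (34.4, -118.1, False),
    (34.7, -118.3, True),
    (51.5, -0.1, False),
]
cells = summarize(sample, cell_deg=1.0)
```

Changing `cell_deg` between 0.5 and 2 mimics the way the circle spacing changes with zoom level.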

We hope that this website makes our outage data more accessible to researchers and the public.

The raw data underlying this website is available on request; see our outage dataset webpage.

The research is funded by the Department of Homeland Security (DHS) Cyber Security Division (through the LACREND and Retro-Future Bridge and Outages projects) and Michael Keston, a real estate entrepreneur and philanthropist (through the Michael Keston Endowment). Michael Keston helped support the initial version of this website, and DHS has supported our outage data collection and algorithm development.

The website was developed by Dominik Staros, ISI web developer and owner of Imagine Web Consulting, based on data collected by ISI researcher Yuri Pradkin. It builds on prior work by Pradkin, Heidemann and USC’s Lin Quan in ISI’s Analysis of Network Traffic Lab.

ISI has featured our new website on the ISI news page.