We released a new technical report “LDplayer: DNS Experimentation at Scale”, ISI-TR-722, available at https://www.isi.edu/publications/trpublic/pdfs/ISI-TR-722.pdf.

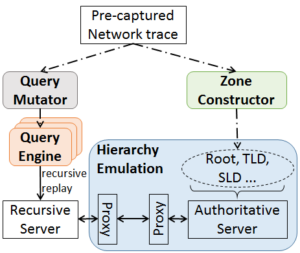

DNS has evolved over the last 20 years, improving in security and privacy and broadening the kinds of applications it supports. However, this evolution has been slowed by the large installed base with a wide range of implementations that are slow to change. Changes need to be carefully planned, and their impact is difficult to model due to DNS optimizations, caching, and distributed operation. We suggest that experimentation at scale is needed to evaluate changes and speed DNS evolution. This paper presents LDplayer, a configurable, general-purpose DNS testbed that enables DNS experiments to scale in several dimensions: many zones, multiple levels of DNS hierarchy, high query rates, and diverse query sources. LDplayer provides high fidelity experiments while meeting these requirements through its distributed DNS query replay system, methods to rebuild the relevant DNS hierarchy from traces, and efficient emulation of this hierarchy of limited hardware. We show that a single DNS server can correctly emulate multiple independent levels of the DNS hierarchy while providing correct responses as if they were independent. We validate that our system can replay a DNS root traffic with tiny error (+/- 8ms quartiles in query timing and +/- 0.1% difference in query rate). We show that our system can replay queries at 87k queries/s, more than twice of a normal DNS Root traffic rate, maxing out one CPU core used by our customized DNS traffic generator. LDplayer’s trace replay has the unique ability to evaluate important design questions with confidence that we capture the interplay of caching, timeouts, and resource constraints. As an example, we can demonstrate the memory requirements of a DNS root server with all traffic running over TCP, and we identified performance discontinuities in latency as a function of client RTT.

Software developed in this paper is available at https://ant.isi.edu/software/ldplayer/.