The paper “Detecting Malicious Activity with DNS Backscatter” will appear at the ACM Internet Measurement Conference in October 2015 in Tokyo, Japan. A copy is available at http://www.isi.edu/~johnh/PAPERS/Fukuda15a.pdf.

![How network activity generates DNS backscatter that is visible at authority servers. (Figure 1 from [Fukuda15a]).](http://ant.isi.edu/blog/wp-content/uploads/2015/09/Fukuda15a_icon-300x190.png)

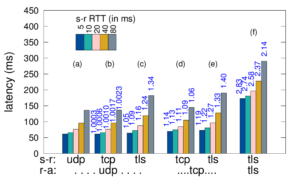

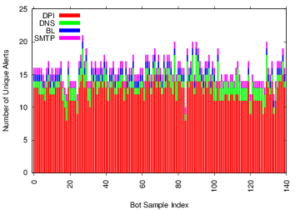

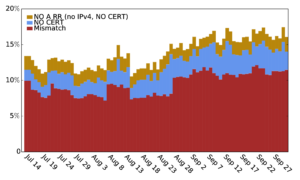

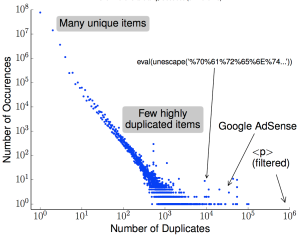

Network-wide activity is when one computer (the originator) touches many others (the targets). Motives for activity may be benign (mailing lists, CDNs, and research scanning), malicious (spammers and scanners for security vulnerabilities), or perhaps indeterminate (ad trackers). Knowledge of malicious activity may help anticipate attacks, and understanding benign activity may set a baseline or characterize growth. This paper identifies DNS backscatter as a new source of information about network-wide activity. Backscatter is the reverse DNS queries caused when targets or middleboxes automatically look up the domain name of the originator. Queries are visible to the authoritative DNS servers that handle reverse DNS. While the fraction of backscatter they see depends on the server’s location in the DNS hierarchy, we show that activity that touches many targets appears even in sampled observations. We use information about the queriers to classify originator activity using machine learning. Our algorithm has reasonable precision (70–80%) as shown by data from three different organizations operating DNS servers at the root or country level. Using this technique, we examine nine months of activity from one authority to identify trends in scanning, identifying bursts corresponding to Heartbleed and broad and continuous scanning of ssh.
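To make the idea of backscatter concrete, here is a minimal Python sketch of the first step only: grouping reverse DNS queries seen at an authority by the originator they ask about, and keeping originators that many distinct queriers looked up. It is not the paper's classifier, which applies machine learning to information about the queriers. The log format (pairs of querier address and query name), the function names, and the `min_queriers` threshold are all assumptions made for illustration, and only IPv4 `in-addr.arpa` names are handled.

```python
import ipaddress
from collections import defaultdict


def originator_from_qname(qname):
    """Return the IPv4 address encoded in an in-addr.arpa name, or None."""
    suffix = ".in-addr.arpa"
    name = qname.rstrip(".").lower()
    if not name.endswith(suffix):
        return None
    labels = name[: -len(suffix)].split(".")
    if len(labels) != 4:
        return None
    try:
        # Reverse names list the octets in reverse order.
        return str(ipaddress.IPv4Address(".".join(reversed(labels))))
    except ValueError:
        return None


def queriers_per_originator(log):
    """Map each originator to the set of distinct queriers that looked it up.

    `log` is an iterable of (querier_ip, qname) pairs (a hypothetical format).
    """
    queriers = defaultdict(set)
    for querier, qname in log:
        originator = originator_from_qname(qname)
        if originator is not None:
            queriers[originator].add(querier)
    return queriers


def network_wide_originators(log, min_queriers=3):
    """Originators looked up by at least `min_queriers` distinct queriers.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    counts = queriers_per_originator(log)
    return sorted(o for o, q in counts.items() if len(q) >= min_queriers)


# Made-up sample data using documentation address ranges.
SAMPLE_LOG = [
    ("198.51.100.1", "7.113.0.203.in-addr.arpa."),
    ("198.51.100.2", "7.113.0.203.in-addr.arpa."),
    ("198.51.100.3", "7.113.0.203.in-addr.arpa."),
    ("198.51.100.1", "9.113.0.203.in-addr.arpa."),
    ("198.51.100.4", "www.example.com."),
]

if __name__ == "__main__":
    print(network_wide_originators(SAMPLE_LOG))
```

In this sample, only the originator that three different queriers looked up passes the threshold. A real deployment would then compute features of those queriers and feed them to a classifier, as the paper describes.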

The work in this paper is by Kensuke Fukuda (NII/Sokendai) and John Heidemann (USC/ISI) and was begun when Fukuda-san was a visiting scholar at USC/ISI. Kensuke Fukuda’s work in this paper is partially funded by the Young Researcher Overseas Visit Program by Sokendai, JSPS Kakenhi, and the Strategic International Collaborative R&D Promotion Project of the Ministry of Internal Affairs and Communications in Japan, and by the European Union Seventh Framework Programme. John Heidemann’s work is partially supported by US DHS S&T, Cyber Security Division.

Some of the datasets in this paper are available to researchers, either from the authors or through DNS-OARC. We list DNS backscatter datasets and methods to obtain them at https://ant.isi.edu/datasets/dns_backscatter/index.html.

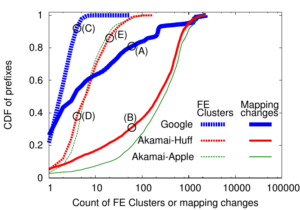

Large web services employ CDNs to improve user performance. CDNs improve performance by serving users from nearby FrontEnd (FE) Clusters. They also spread users across FE Clusters when one is overloaded or unavailable and others have unused capacity. Our paper is the first to study the dynamics of the user-to-FE Cluster mapping for Google and Akamai from a large range of client prefixes. We measure how 32,000 prefixes associate with FE Clusters in their CDNs every 15 minutes for more than a month. We study geographic and latency effects of mapping changes, showing that 50–70% of prefixes switch between FE Clusters that are very distant from each other (more than 1,000 km), and that these shifts sometimes (28–40% of the time) result in large latency shifts (100 ms or more). Most prefixes see large latencies only briefly, but a few (2–5%) see high latency much of the time. We also find that many prefixes are directed to several countries over the course of a month, complicating questions of jurisdiction.
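The "distant switch" measure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the paper's measurement code: the timeline format (a per-prefix list of FE Cluster identifiers, one per 15-minute sample), the cluster coordinates, and all function names are hypothetical, and distance is approximated with the haversine great-circle formula.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    # Clamp to guard against rounding slightly above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


def has_distant_switch(clusters, locations, min_km=1000.0):
    """True if two consecutive samples map to clusters more than min_km apart."""
    for prev, cur in zip(clusters, clusters[1:]):
        if prev != cur and haversine_km(locations[prev], locations[cur]) > min_km:
            return True
    return False


def fraction_with_distant_switch(timeline, locations, min_km=1000.0):
    """Fraction of prefixes that see at least one distant switch."""
    if not timeline:
        return 0.0
    hits = sum(has_distant_switch(c, locations, min_km) for c in timeline.values())
    return hits / len(timeline)


# Made-up cluster locations (approximate airport coordinates) and timelines.
LOCATIONS = {
    "lax": (33.94, -118.41),
    "sfo": (37.62, -122.38),
    "nrt": (35.77, 140.39),
}
TIMELINE = {
    "192.0.2.0/24": ["lax", "sfo", "lax"],   # switches, but between nearby clusters
    "198.51.100.0/24": ["lax", "lax", "nrt"],  # one switch across the Pacific
}

if __name__ == "__main__":
    print(fraction_with_distant_switch(TIMELINE, LOCATIONS))
```

Here one of the two sample prefixes moves between clusters more than 1,000 km apart, so the fraction is one half. The same per-prefix timeline could be joined with latency samples to ask how often such switches coincide with latency shifts of 100 ms or more.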

![Predicting longitude from observed diurnal phase ([Quan14c], figure 14c)](http://ant.isi.edu/blog/wp-content/uploads/2014/10/Quan14c_icon-300x175.png)

![Comparing observed diurnal phase and geolocation longitude for 287k geolocatable, diurnal blocks ([Quan14b], figure 14b)](http://ant.isi.edu/blog/wp-content/uploads/2014/05/Quan14b_icon-300x210.png)