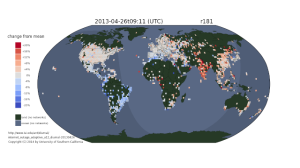

Does the Internet sleep? Yes, and we have the video!

We have recently put together a video showing 35 days of Internet address usage as observed from Trinocular, our outage detection system.

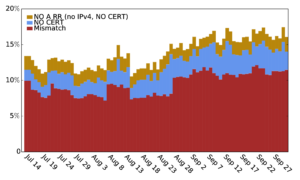

The Internet sleeps: address use in South America is low (blue) in the early morning, while in India it is high (red) in the afternoon.

When we look at address usage over time, we see that some parts of the globe have daily swings of ±10% to 20% in the number of active addresses. In China, India, eastern Europe, and much of South America, the Internet sleeps.
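To make a figure like "±10% to 20%" concrete, one way to quantify a daily swing is to fit a 24-hour sinusoid to a block's active-address counts and report its amplitude relative to the mean count. The minimal sketch below does this by projecting the counts onto a single one-cycle-per-day frequency (one DFT bin). The function name `diurnal_swing`, its inputs, and the assumption of evenly spaced samples covering whole days are all hypothetical illustrations; this is not necessarily how Trinocular or the paper measures diurnal behavior.

```python
import math

def diurnal_swing(counts, samples_per_day):
    """Estimate the daily swing of a block's active-address count.

    Hypothetical helper: `counts` are active-address counts taken at a
    fixed interval, assumed to cover a whole number of days.
    Returns (amplitude_fraction, peak_hour), where amplitude_fraction is
    the amplitude of the best-fitting 24-hour sinusoid divided by the
    mean count (0.15 means a swing of about +/-15%), and peak_hour is
    the time of the peak in hours after the first sample, modulo 24.
    """
    n = len(counts)
    if samples_per_day < 3:
        raise ValueError("need at least 3 samples per day")
    if n == 0 or n % samples_per_day != 0:
        raise ValueError("counts must cover a whole number of days")
    mean = sum(counts) / n
    if mean == 0:
        raise ValueError("mean count is zero; swing is undefined")

    # Project onto cos/sin with exactly one cycle per day. Over whole
    # days, the constant (mean) part projects to zero.
    step = 2 * math.pi / samples_per_day
    re = sum(c * math.cos(step * i) for i, c in enumerate(counts))
    im = sum(c * math.sin(step * i) for i, c in enumerate(counts))

    amplitude = 2 * math.hypot(re, im) / n   # sinusoid amplitude, in addresses
    phase = math.atan2(im, re)               # radians; where the peak falls
    peak_hour = (phase / (2 * math.pi) * 24) % 24
    return amplitude / mean, peak_hour
```

For example, a week of hourly counts that swing ±15 around a mean of 100 and peak at hour 14 gives an amplitude fraction of about 0.15 and a peak near hour 14. Using a single frequency keeps the sketch short; a real analysis would also need to decide whether the daily component is strong enough, relative to noise, to call a block diurnal at all.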

Understanding when the Internet sleeps is important: it helps us understand how different countries' network policies affect use, it is part of outage detection, and it is one piece of our long-term goal of understanding exactly how big the Internet is.

See http://www.isi.edu/ant/diurnal/ for the video, or read our technical paper “When the Internet Sleeps: Correlating Diurnal Networks With External Factors” by Quan, Heidemann, and Pradkin, to appear at ACM IMC, Nov. 2014.

The datasets used to generate this video are available (listed here).

This work is partly supported by DHS S&T, Cyber Security division, agreements FA8750-12-2-0344 (under AFRL) and N66001-13-C-3001 (under SPAWAR). The views contained herein are those of the authors and do not necessarily represent those of DHS or the U.S. Government. This work was classified by USC's IRB as non-human subjects research (IIR00001648).

![Predicting longitude from observed diurnal phase ([Quan14c], figure 14c)](http://ant.isi.edu/blog/wp-content/uploads/2014/10/Quan14c_icon-300x175.png)

![Comparing observed diurnal phase and geolocation longitude for 287k geolocatable, diurnal blocks ([Quan14b], figure 14b)](http://ant.isi.edu/blog/wp-content/uploads/2014/05/Quan14b_icon-300x210.png)