I would like to congratulate Dr. Lin Quan on defending his PhD in Dec. 2013 and completing his doctoral dissertation “Learning about the Internet through Efficient Sampling and Aggregation” in Jan. 2014.

From the abstract:

The Internet is important for nearly all aspects of our society, affecting ordinary people, businesses, and social activities. Because of its importance and wide-spread applications, we want to have good knowledge about Internet’s operation, reliability and performance, through various kinds of measurements. However, despite the wide usage, we only have limited knowledge of its overall performance and reliability. The first reason of this limited knowledge is that there is no central governance of the Internet, making both active and passive measurements hard. The second reason is the huge scale of the Internet. This makes brute-force analysis hard because of practical computing resource limits such as CPU, memory and probe rate.

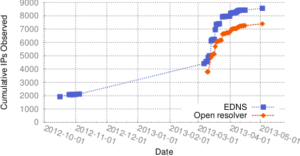

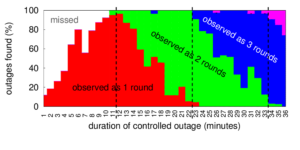

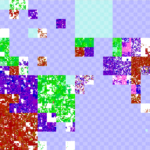

This thesis states that sampling and aggregation are necessary to overcome resource constraints in time and space to learn about better knowledge of the Internet. Many other Internet measurement studies also utilize sampling and aggregation techniques to discover properties of the Internet. We distinguish our work by exploring novel mechanisms and new knowledge in several specific areas. First, we aggregate short-time-scale observations and use an efficient multi-time-scale query scheme to discover the properties and reasons of long-lived Internet flows. Second, we sample and probe /24 blocks in the IPv4 address space, and use greedy clustering algorithms to efficiently characterize Internet outages. Third, we show an efficient and effective aggregation technique by visualization and clustering. This technique makes both manual inspection and automated characterization easier. Last, we develop an adaptive probing system to study global scale Internet reliability. It samples and adapts probe rate within each /24 block for accurate beliefs. By aggregation and correlation to other domains, we are also able to study broader policy effects on Internet use, such as political causes, economic conditions, and access technologies.

This thesis provides several examples of Internet knowledge discovery with new mechanisms of sampling and aggregation techniques. We believe our approaches of new sampling and aggregation mechanisms can be used by and will inspire new ways for future Internet measurement systems to overcome resource constraints, such as large amount and dispersed data.
