We released a new technical report “LDplayer: DNS Experimentation at Scale (abstract with poster)”, ISI-TR-721, available at https://www.isi.edu/publications/trpublic/pdfs/ISI-TR-721.pdf.

The poster abstract and poster (included as part of the technical report) appeared in the poster session at SIGCOMM 2017 in August 2017 in Los Angeles, CA, USA.

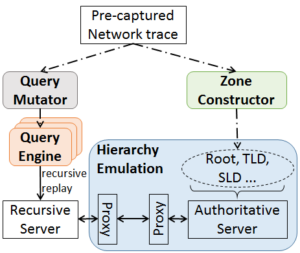

In the last 20 years the core of the Domain Name System (DNS) has improved in security and privacy, and DNS use has broadened from name-to-address mapping to critical roles in service discovery and anti-spam. However, protocol evolution and expansion of use have been slow because advances must consider a huge and diverse installed base. We suggest that experimentation at scale can fill this gap. To meet the need for experimentation at scale, this paper presents LDplayer, a configurable, general-purpose DNS testbed. LDplayer enables DNS experiments to scale in several dimensions: many zones, multiple levels of DNS hierarchy, high query rates, and diverse query sources. To meet these requirements while providing high-fidelity experiments, LDplayer includes a distributed DNS query replay system and methods to rebuild the relevant DNS hierarchy from traces. We show that a single DNS server can correctly emulate multiple independent levels of the DNS hierarchy while providing correct responses as if they were independent. We show the importance of our system to evaluate pressing DNS design questions, using it to evaluate changes in DNSSEC key size.
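To make the idea of distributed query replay more concrete, here is a minimal sketch of how a recorded trace might be split across replay workers while keeping the original inter-query timing. It is not LDplayer's implementation: the `Query` record, the `plan_replay` function, and the choice to assign queries to workers by hashing the source address are all assumptions made for illustration.

```python
import zlib
from collections import defaultdict
from typing import NamedTuple


class Query(NamedTuple):
    """One trace record (hypothetical layout, not LDplayer's trace format)."""
    timestamp: float  # seconds, as recorded in the trace
    source: str       # original client address
    qname: str
    qtype: str


def plan_replay(trace, num_workers):
    """Return {worker_id: [(delay_from_start_seconds, Query), ...]}.

    Assumption: every query from one source goes to the same worker,
    so per-source ordering is preserved within a worker.
    """
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    ordered = sorted(trace, key=lambda q: q.timestamp)
    if not ordered:
        return {}
    start = ordered[0].timestamp
    plan = defaultdict(list)
    for q in ordered:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        worker = zlib.crc32(q.source.encode("ascii")) % num_workers
        # Store the delay relative to the first query in the trace.
        plan[worker].append((q.timestamp - start, q))
    return dict(plan)


if __name__ == "__main__":
    trace = [
        Query(12.0, "192.0.2.1", "example.com.", "A"),
        Query(10.0, "192.0.2.2", "example.org.", "AAAA"),
        Query(10.5, "192.0.2.1", "example.net.", "NS"),
    ]
    for worker, items in sorted(plan_replay(trace, 2).items()):
        for delay, q in items:
            print(worker, f"+{delay:.1f}s", q.source, q.qname, q.qtype)
```

In this sketch each worker would wait for the stored delay before sending its query, so the combined stream approximates the original query rate. A real replay system also has to deal with clock synchronization between workers and with sending from many source addresses, which this example leaves out.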

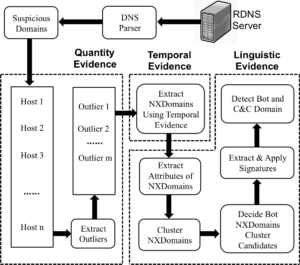

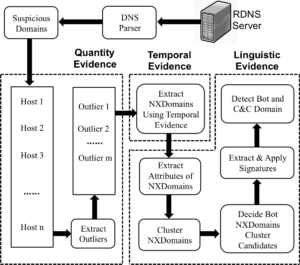

These limitations make it very hard to detect individual bots when using traffic collected from a single network. In this paper, we introduce BotDigger, a system that detects DGA-based bots using DNS traffic without a priori knowledge of the domain generation algorithm. BotDigger utilizes a chain of evidence, including quantity, temporal and linguistic evidence
