We have published a new conference paper, “Detecting ICMP Rate Limiting in the Internet”, at PAM 2018 (the Passive and Active Measurement Conference) in Berlin, Germany.

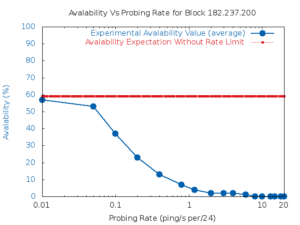

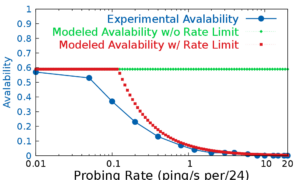

Comparing model and experimental effects of rate limiting (Figure 4 from [Guo18a])

ICMP active probing is the center of many network measurements. Rate limiting to ICMP traffic, if undetected, could distort measurements and create false conclusions. To settle this concern, we look systematically for ICMP rate limiting in the Internet. We create FADER, a new algorithm that can identify rate limiting from user-side traces with minimal new measurement traffic. We validate the accuracy of FADER with many different network configurations in testbed experiments and show that it almost always detects rate limiting. With this confidence, we apply our algorithm to a random sample of the whole Internet, showing that rate limiting exists but that for slow probing rates, rate limiting is very rare. For our random sample of 40,493 /24 blocks (about 2% of the responsive space), we confirm 6 blocks (0.02%!) see rate limiting at 0.39 packets/s per block. We look at higher rates in public datasets and suggest that fall-off in responses as rates approach 1 packet/s per /24 block is consistent with rate limiting. We also show that even very slow probing (0.0001 packet/s) can encounter rate limiting of NACKs that are concentrated at a single router near the prober.
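To give a feel for why responses fall off once the probing rate exceeds a rate limit, here is a minimal sketch of a generic token-bucket limiter. It is not FADER and not the model from the paper. The function names, the 1 packet/s limit, the bucket size of one token, and the evenly spaced probes are all assumptions made for illustration. Under those assumptions, the fraction of probes answered should be roughly min(1, limit / probing rate).

```python
# Illustrative only: a generic token-bucket rate limiter, NOT the FADER algorithm.
# All names and parameter values below are assumptions made for this sketch.

EPS = 1e-9  # tolerance so accumulated float error does not drop a response


def simulate_token_bucket(probe_rate, limit_rate, duration_s=1000.0, bucket_size=1.0):
    """Return the fraction of evenly spaced probes answered under a token-bucket limit.

    probe_rate and limit_rate are in packets per second.
    """
    interval = 1.0 / probe_rate            # time between consecutive probes
    n_probes = int(duration_s * probe_rate)
    tokens = bucket_size                   # start with a full bucket
    answered = 0
    for _ in range(n_probes):
        if tokens >= 1.0 - EPS:            # a token is available: reply is sent
            tokens -= 1.0
            answered += 1
        # refill during the gap before the next probe, capped at the bucket size
        tokens = min(bucket_size, tokens + limit_rate * interval)
    return answered / n_probes


def expected_fraction(probe_rate, limit_rate):
    """Simple steady-state estimate: all probes answered below the limit,
    limit_rate / probe_rate of them answered above it."""
    return min(1.0, limit_rate / probe_rate)


if __name__ == "__main__":
    limit = 1.0  # hypothetical limit in packets/s
    for rate in (0.25, 0.5, 1.0, 2.0, 4.0):
        simulated = simulate_token_bucket(rate, limit)
        estimate = expected_fraction(rate, limit)
        print(f"probe rate {rate:4.2f} pkt/s: simulated {simulated:.3f}, estimate {estimate:.3f}")
```

In this toy setting, probing below the limit sees no loss, which is why slow probing rarely triggers rate limiting, while probing above it sees a response fraction that shrinks in proportion to the probing rate.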

The datasets used in this paper are all public. ISI Internet Census and Survey data (including the it71w, it70w, it56j, it57j, and it58j censuses and surveys) are available at https://ant.isi.edu/datasets/index.html. The ZMap 50-second experiment data are from the ZMap authors' WOOT '14 paper and can be obtained from them upon request.

This conference paper is joint work by Hang Guo and John Heidemann of USC/ISI.