The paper “Mapping the Expansion of Google’s Serving Infrastructure” (by Matt Calder, Xun Fan, Zi Hu, Ethan Katz-Bassett, John Heidemann, and Ramesh Govindan) will appear at the 2013 ACM Internet Measurement Conference (IMC) in Barcelona, Spain, in October 2013.

This work was also featured today in Digits, the technology news and analysis blog from the Wall Street Journal, and at USC’s press room.

A copy of the paper is available at http://www.isi.edu/~johnh/PAPERS/Calder13a, and data from the work is available at http://mappinggoogle.cs.usc.edu, from http://www.isi.edu/ant/traces/mapping_google/index.html, and from http://www.predict.org.

![[Calder13a] figure 5a](http://ant.isi.edu/blog/wp-content/uploads/2013/10/Calder13a_icon-300x156.png)

From the paper’s abstract:

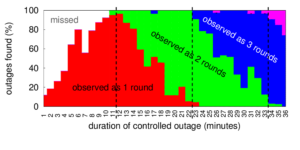

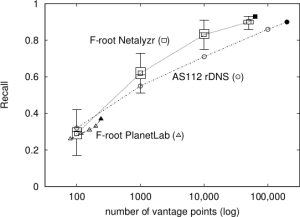

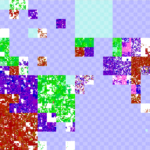

Modern content-distribution networks both provide bulk content and act as “serving infrastructure” for web services in order to reduce user-perceived latency. Serving infrastructures such as Google’s are now critical to the online economy, making it imperative to understand their size, geographic distribution, and growth strategies. To this end, we develop techniques that enumerate IP addresses of servers in these infrastructures, find their geographic location, and identify the association between clients and clusters of servers. While general techniques for server enumeration and geolocation can exhibit large error, our techniques exploit the design and mechanisms of serving infrastructure to improve accuracy. We use the EDNS-client-subnet DNS extension to measure which clients a service maps to which of its serving sites. We devise a novel technique that uses this mapping to geolocate servers by combining noisy information about client locations with speed-of-light constraints. We demonstrate that this technique substantially improves geolocation accuracy relative to existing approaches. We also cluster server IP addresses into physical sites by measuring RTTs and adapting the cluster thresholds dynamically. Google’s serving infrastructure has grown dramatically in the past ten months, and we use our methods to chart its growth and understand its content serving strategy. We find that the number of Google serving sites has increased more than sevenfold, and most of the growth has occurred by placing servers in large and small ISPs across the world, not by expanding Google’s backbone.
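
As a rough illustration of what a speed-of-light constraint means in general (this is a sketch of the basic idea, not the paper's implementation), a measured round-trip time puts an upper bound on how far away a server can be. The function names and the assumption that signals travel at about two-thirds of the speed of light in fiber are ours, not taken from the paper.

```python
# Speed of light in vacuum, in kilometers per millisecond.
C_KM_PER_MS = 299.792458

# Assumption (not from the paper): signals in optical fiber travel
# at roughly two-thirds of the speed of light in vacuum.
FIBER_FACTOR = 2.0 / 3.0


def max_distance_km(rtt_ms: float) -> float:
    """Upper bound on the one-way distance implied by a round-trip time."""
    if rtt_ms < 0:
        raise ValueError("RTT must be non-negative")
    one_way_ms = rtt_ms / 2.0
    return one_way_ms * C_KM_PER_MS * FIBER_FACTOR


def is_feasible(candidate_distance_km: float, rtt_ms: float) -> bool:
    """True if a candidate location is close enough to be consistent with the RTT."""
    return candidate_distance_km <= max_distance_km(rtt_ms)
```

For example, under these assumptions a 10 ms round trip rules out any candidate server location more than about 1,000 km from the measurement point.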
